Statistically, hardware failure is rarely the source of an outage that impacts application availability. Most such outages are caused by misunderstandings of logical dependencies during maintenance. A good way to limit this impact is to have a completely separate Outposts Rack deployment that is tied to a different parent region. These twin deployments can, and often do, reside physically within the same site, although diversity of utilities such as power and internet connectivity should be maintained between them:

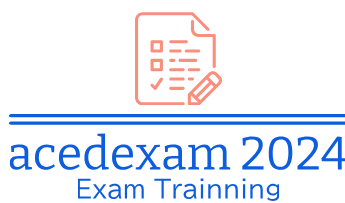

Figure 5.19 – Communication path restrictions for two deployments in the same VPC

If customers choose to take this approach, they must take the following restrictions into account:

Network traffic from resources on two Outposts cannot traverse the AWS region (see Figure 5.19)

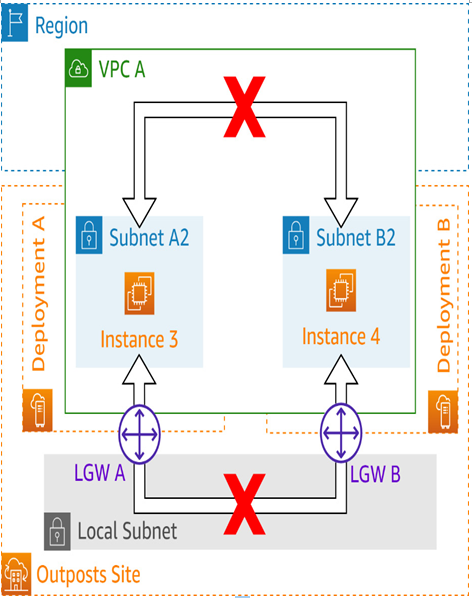

Network traffic from resources on two Outposts cannot traverse the customer network unless the resources are on different VPCs (see Figure 5.20):

Figure 5.20 – Available communication paths for deployments in different VPCs

In either case, all Outpost-to-Outpost traffic is blocked if it traverses the region. This block is in place to prevent redundant network egress charges from both the originating AWS Outposts Rack deployment and the region.

Local VPC CIDR routes always direct traffic between resources in the same VPC through the region, so that traffic is not allowed because of this block. As a result, traffic between two AWS Outposts Rack deployments can only traverse the customer’s local network via the LGWs, and even then, only when separate VPCs are in use.
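The two restrictions above reduce to a simple decision rule, which the following minimal sketch models in Python. It is an illustration of the rule as described in this section, not an AWS API: the `Resource` class, its fields, the `allowed_path` function, and the identifiers used are all hypothetical names introduced for this example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Resource:
    """Hypothetical description of a resource running on an Outpost."""
    name: str
    outpost_id: str  # assumed identifier of the hosting Outposts Rack deployment
    vpc_id: str      # assumed identifier of the VPC the resource belongs to


def allowed_path(src: Resource, dst: Resource) -> Optional[str]:
    """Return the usable path between resources on two Outposts, or None if blocked.

    Models only the rule described in the text:
    - same VPC: the local VPC CIDR route sends traffic through the region,
      and Outpost-to-Outpost traffic through the region is blocked
    - different VPCs: traffic may cross the customer's local network via the LGWs
    """
    if src.outpost_id == dst.outpost_id:
        raise ValueError("this sketch only covers traffic between two Outposts")
    if src.vpc_id == dst.vpc_id:
        return None  # would be routed through the region, which is blocked
    return "local network via LGWs"


if __name__ == "__main__":
    app_a = Resource("app-a", outpost_id="outpost-1", vpc_id="vpc-1")
    app_b = Resource("app-b", outpost_id="outpost-2", vpc_id="vpc-1")
    app_c = Resource("app-c", outpost_id="outpost-2", vpc_id="vpc-2")
    for peer in (app_b, app_c):
        print(app_a.name, "->", peer.name, ":", allowed_path(app_a, peer) or "blocked")
```

The practical consequence is a design decision made before deployment: if workloads on twin deployments need to talk to each other, they have to be placed in separate VPCs from the start.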

Security

AWS Outposts builds upon the AWS Nitro System to provide customers with enhanced security mechanisms that protect, monitor, and verify their Outpost’s instance hardware and firmware. This technology allows resources needed for virtualization to be offloaded to dedicated software and hardware, which provides an additional layer of security by reducing the attack surface. The Nitro System’s security model prohibits administrative access to eliminate the possibility of human error or bad-actor tampering.

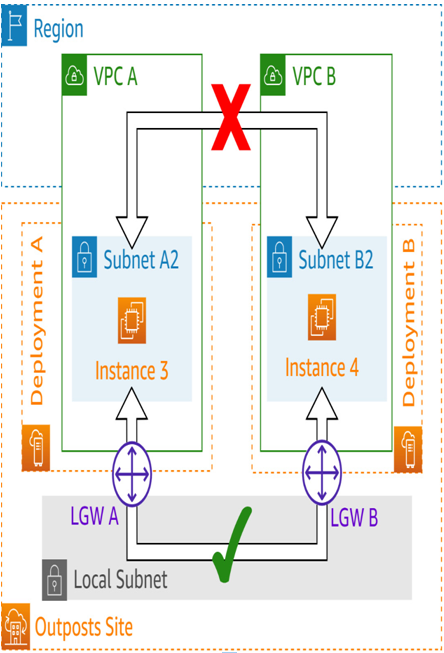

Similarly, the traditional AWS Shared Responsibility Model is also built upon by AWS Outposts but updated to fit the service’s on-premises aspects. In the following figure, take note of how the dividing line for a customer’s responsibilities shifts to cover additional responsibilities. Examples include elements such as physical security and access control, environmental concerns, network redundancy, and capacity management:

Figure 5.21 – Shared security model modified for AWS Outposts

The EBS volumes and snapshots that are used within an AWS Outposts Rack deployment are encrypted by default using AWS Key Management Service (KMS) keys. Similarly, all data transmitted between a customer’s deployment and the region is encrypted. Customers are responsible for encrypting data in transit between the LGW and its attached local network using protocols such as Transport Layer Security (TLS).
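As an illustration of the customer's side of this responsibility, the following minimal sketch shows how an application on the local network might open a TLS-protected connection to a service running on an Outpost, using only Python's standard `ssl` module. The function names and the choice of TLS 1.2 as a minimum version are assumptions made for this example, not requirements stated by AWS.

```python
import socket
import ssl


def make_client_tls_context() -> ssl.SSLContext:
    """Build a client-side TLS context with certificate and hostname checks enabled."""
    context = ssl.create_default_context()            # verifies certificates by default
    context.minimum_version = ssl.TLSVersion.TLSv1_2  # assumed minimum for this sketch
    return context


def open_tls_connection(host: str, port: int, timeout: float = 5.0) -> ssl.SSLSocket:
    """Connect to host:port and wrap the socket in TLS (hypothetical helper)."""
    raw_socket = socket.create_connection((host, port), timeout=timeout)
    return make_client_tls_context().wrap_socket(raw_socket, server_hostname=host)
```

A caller would use `open_tls_connection` with the hostname and port of its own service. The point of the sketch is only that encryption on this segment has to be configured by the customer's applications, since it is not provided by the deployment itself.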

A primary reason that disconnecting the service link from the region is problematic is the way such communications are secured. All communications to an AWS Outposts deployment must be signed with an IAM principal’s access key and secret access key, or with temporary credentials issued by the AWS Security Token Service (AWS STS). Furthermore, the publication of VPC flow logs to CloudWatch, S3, or GuardDuty will cease to function properly during disconnected events.
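To make the idea of a signed request more concrete, the sketch below derives a signing key and a signature in the style of AWS Signature Version 4, the scheme generally used for AWS API requests. The text does not name the signing scheme, so treating it as Signature Version 4 is an assumption, and the helper names, the placeholder secret, and the string being signed are all illustrative only.

```python
import hashlib
import hmac


def _hmac_sha256(key: bytes, message: str) -> bytes:
    """Return the raw HMAC-SHA256 digest of message under key."""
    return hmac.new(key, message.encode("utf-8"), hashlib.sha256).digest()


def derive_signing_key(secret_access_key: str, date_stamp: str,
                       region: str, service: str) -> bytes:
    """Derive a date-, region-, and service-scoped key from the secret access key."""
    k_date = _hmac_sha256(("AWS4" + secret_access_key).encode("utf-8"), date_stamp)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


def sign(signing_key: bytes, string_to_sign: str) -> str:
    """Return the hex signature that would accompany the request."""
    return hmac.new(signing_key, string_to_sign.encode("utf-8"),
                    hashlib.sha256).hexdigest()


if __name__ == "__main__":
    # Placeholder values, not real credentials or a real canonical request.
    key = derive_signing_key("EXAMPLE-SECRET", "20240101", "us-east-1", "ec2")
    print(sign(key, "example-string-to-sign"))
```

Because the signature is computed from a secret that only the caller and AWS hold, the receiving side needs access to the corresponding credential data to verify it.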

A local security feature the Nitro hypervisor provides is the automatic scrubbing of EC2 instance resources after use. Whenever an instance is stopped, all memory allocated to it is scrubbed by setting each bit to zero. When an instance is terminated, a similar wipe of its attached block storage also occurs.