When an Outposts rack requires physical maintenance or hardware replacement, AWS works with the customer to schedule AWS personnel to come to the facility and replace the hardware. Before the hardware is returned to AWS, customers can use the provided Nitro Security Key to cryptographically shred the data on it according to NIST standards.

Logical networking

Now that we’ve covered the physical networking elements, let’s shift focus to the logical layer. This is where we will introduce key AWS networking concepts unique to AWS Outposts Rack.

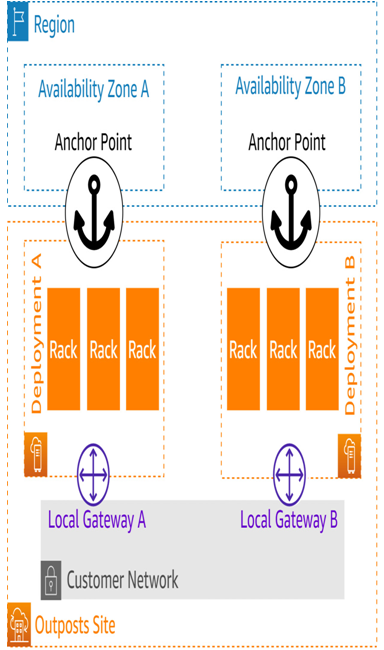

Extending an AWS region

AWS Outposts racks offer customers the ability to seamlessly extend their existing Amazon Virtual Private Clouds (VPCs) into their AWS Outposts deployment. From the perspective of an EC2 instance in a subnet on AWS Outposts, things are no different than if it had been launched in-region. It can route to instances within the same VPC just like two instances in different AZs would. Constructs defined on a per-VPC basis such as security groups, routing tables, or DHCP options can be leveraged normally. In this sense, an Outposts rack deployment can be thought of as a little piece of an AWS region that only you have access to.
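To make the idea of extending a VPC more concrete, here is a minimal sketch of how a subnet on an Outpost might be requested. It assumes the EC2 `CreateSubnet` API, which accepts an `OutpostArn` alongside the usual VPC, CIDR, and Availability Zone parameters. The helper name `outpost_subnet_request` and every identifier, CIDR, and ARN below are placeholders invented for illustration, not values from a real deployment.

```python
import ipaddress


def outpost_subnet_request(vpc_id, vpc_cidr, subnet_cidr, availability_zone, outpost_arn):
    """Build CreateSubnet parameters for a subnet placed on an Outpost.

    Hypothetical helper: it only assembles and sanity-checks the request;
    it does not call AWS.
    """
    vpc_net = ipaddress.ip_network(vpc_cidr)
    subnet_net = ipaddress.ip_network(subnet_cidr)
    # An Outposts subnet is carved out of the existing VPC range,
    # exactly like an in-region subnet would be.
    if not subnet_net.subnet_of(vpc_net):
        raise ValueError(f"{subnet_net} is not inside VPC range {vpc_net}")
    return {
        "VpcId": vpc_id,
        "CidrBlock": str(subnet_net),
        "AvailabilityZone": availability_zone,
        "OutpostArn": outpost_arn,
    }


# Placeholder values, for illustration only.
params = outpost_subnet_request(
    vpc_id="vpc-0123456789abcdef0",
    vpc_cidr="10.0.0.0/16",
    subnet_cidr="10.0.3.0/24",
    availability_zone="us-west-2a",
    outpost_arn="arn:aws:outposts:us-west-2:123456789012:outpost/op-0123456789abcdef0",
)
print(params)

# With credentials configured, the request could then be sent with boto3:
#   import boto3
#   boto3.client("ec2").create_subnet(**params)
```

Apart from the extra `OutpostArn` key, the request looks the same as one for an in-region subnet, which is the point of the paragraph above.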

Service link

Imagine taking a rack from an AWS data center and installing it in your on-premises data center. You would need to somehow allow the compute and storage components within the rack to communicate with the management and control plane elements within the region you took it from. Because it is not practical to run a pair of really long Ethernet cables, something else must perform this function. This is something AWS calls a service link:

Figure 5.4 – Service link mapping to an AZ in the parent region

There is only one service link per deployment in a logical sense, although redundancy at the physical layer is crucial. The throughput required for a given deployment’s service link is determined by many factors, some of which include the following:

- Sizes of the Amazon Machine Images (AMIs) used
- Amount of VPC traffic to the AWS region needed
- Whether the customer needs to size for peak or average utilization
- Number of racks in the deployment
- Configuration of the compute capacity/physical servers within those racks
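As a rough illustration of how one of these factors (AMI size) interacts with link throughput, the sketch below computes the raw transfer time for an image over a link of a given speed. This is plain arithmetic rather than an AWS sizing method. The function name `transfer_seconds`, the 8 GiB image size, and the link speeds are assumptions chosen for the example, and the result ignores VPN encapsulation, protocol overhead, and any other traffic sharing the link.

```python
def transfer_seconds(size_gib, link_mbps, usable_fraction=1.0):
    """Lower-bound time to move `size_gib` GiB over a `link_mbps` Mbps link.

    `usable_fraction` is a hypothetical knob for the share of the link
    actually available to this transfer (1.0 means the whole link).
    """
    bits = size_gib * 1024**3 * 8                 # GiB -> bits
    usable_bps = link_mbps * 1_000_000 * usable_fraction
    return bits / usable_bps


# Compare a hypothetical 8 GiB AMI across a few example link speeds.
for mbps in (100, 500, 1000):
    minutes = transfer_seconds(8, mbps) / 60
    print(f"{mbps:>5} Mbps: {minutes:.1f} min")
```

Setting `usable_fraction` below 1.0 is one simple way to model sizing for peak rather than average utilization, since it reserves part of the link for other flows.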

The service link is implemented as a series of VPN tunnels that segment the intra-VPC traffic from the management traffic between the AWS region and the Outpost. Ideally, these tunnels are mapped to separate VLANs at layer 2 to simplify troubleshooting, but this is not required.

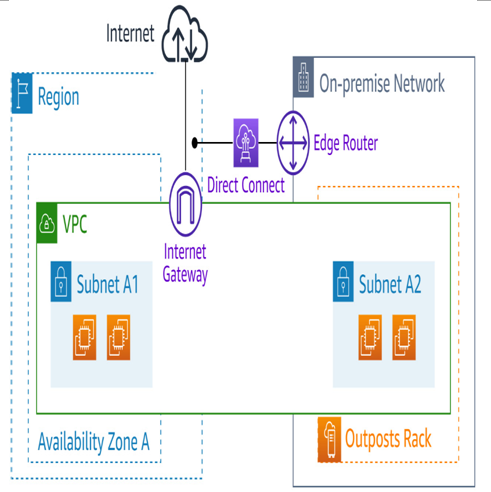

Customers can either create specific routes on their service link VLAN or use the quad-zero (0.0.0.0/0) default route. Either way, the service link reaches the region via one of two options. The first is an AWS Direct Connect public virtual interface (VIF), which terminates on the AWS region’s public IP space:

Figure 5.5 – Service link utilizing an AWS Direct Connect public VIF

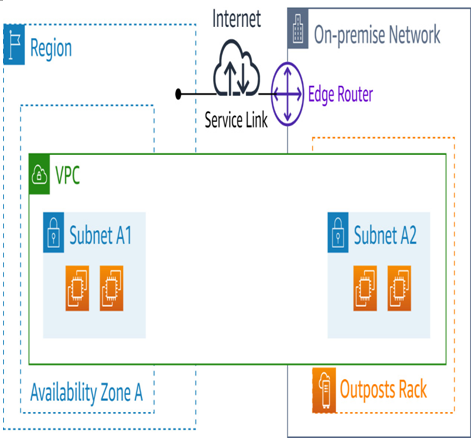

The second option is to run the service link over the public internet. This approach is straightforward because the service link VPN tunnels are initiated outbound from the AWS Outposts rack deployment to the associated region:

Figure 5.6 – Service link over the public internet with no AWS Direct Connect

The following table shows what ports are required for the service link:

| Protocol | Source Port | Source Address | Destination Port | Destination |
| --- | --- | --- | --- | --- |
| UDP | 443 | Outpost service link /26 | 443 | Parent region’s public IP space |
| TCP | 1025-65535 | Outpost service link /26 | 443 | Parent region’s public IP space |

Figure 5.7 – Ports required for the service link
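When reviewing firewall policy, it can help to express these port requirements as data and test sample flows against them. The following is a minimal sketch under stated assumptions: the `Rule` class, the `is_service_link_flow` function, and the `10.10.20.0/26` service link range are all hypothetical, and the destination check against the parent region’s public IP space is deliberately left out because those ranges vary by region.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    protocol: str
    src_ports: range   # allowed source ports
    dst_port: int      # required destination port


# The two rows of the table above, expressed as rules.
SERVICE_LINK_RULES = (
    Rule("UDP", range(443, 444), 443),
    Rule("TCP", range(1025, 65536), 443),
)


def is_service_link_flow(protocol, src_ip, src_port, dst_port, service_link_cidr):
    """Return True if the flow matches one of the service link rules.

    Only protocol, ports, and source address are checked; the destination
    address is not validated in this sketch.
    """
    if ipaddress.ip_address(src_ip) not in ipaddress.ip_network(service_link_cidr):
        return False
    return any(
        rule.protocol == protocol.upper()
        and src_port in rule.src_ports
        and dst_port == rule.dst_port
        for rule in SERVICE_LINK_RULES
    )


# Hypothetical /26 assigned to the Outpost service link.
SERVICE_LINK_CIDR = "10.10.20.0/26"
print(is_service_link_flow("tcp", "10.10.20.5", 40000, 443, SERVICE_LINK_CIDR))
print(is_service_link_flow("udp", "10.10.20.5", 500, 443, SERVICE_LINK_CIDR))
```

Keeping the rules in a small table-like structure makes it easy to compare them line by line with the documented requirements if they change.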

Once this connection has been established, all traffic between the associated VPCs and the AWS Outposts rack deployment flows through it. This includes customer data plane traffic as well as all management traffic, such as firmware and software updates, control plane traffic, and internal resource monitoring flows. The only traffic that does not traverse the service link is traffic bound for the local gateway (LGW).